Dissecting Go’s Benchmarking Framework

Image by Gemini

Table of contents

- Introduction

- A benchmark that looks correct, but isn’t

- Conclusion? Not quite

- The Physics of a Memory Write

- What went wrong in a SIMPLE benchmark?

- Who Actually Controls a Go Benchmark?

- Discovery: Scanning for Candidates

- Context Initialization: More Than a Counter

- Iteration Selection: The Framework’s Choice

- The Timing Trap: External Boundaries

- Summary: The Control Boundary

- Go’s Execution of Benchmarks

- 1. Discovery

- 2. Running Root Benchmark

- 3. Running User Benchmark

- 4. Initial Verification: run1

- 5. Benchmark Loop: run

- 6. Predicting Iterations

- 7. Result Collection

- 8. High Precision Timing

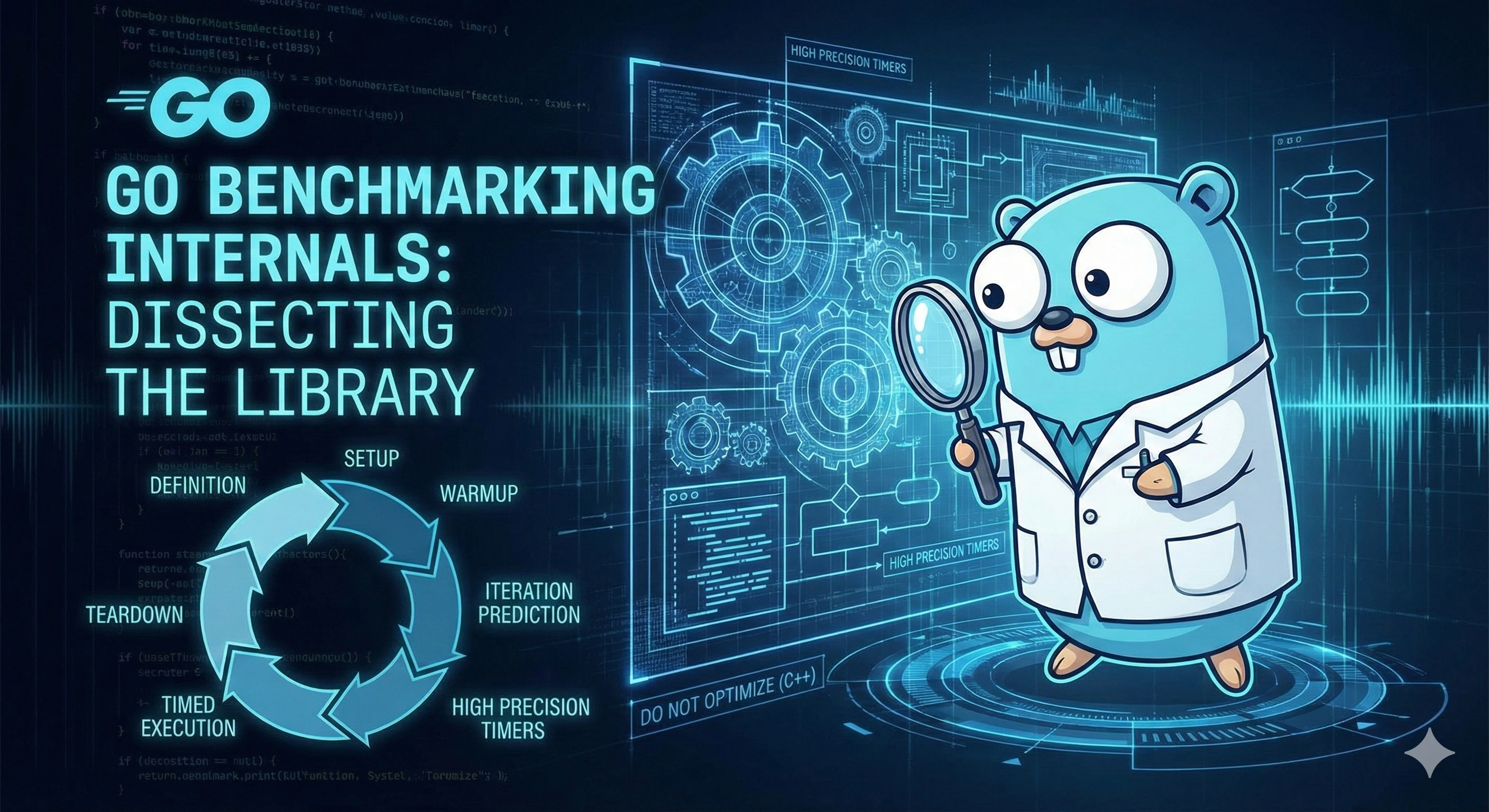

- Building Blocks of a Benchmarking Framework

- 1. Definition

- 2. Setup and teardown boundaries

- 3. Iteration discovery (incidental warmup)

- 4. Iteration prediction

- 5. High-precision timing

- 6. Result aggregation and reporting

- 7. DoNotOptimize

- 8. Explicit non-goals

- Summary

- What you can do after understanding the internals

Introduction

Benchmarking is a critical aspect of software engineering, especially when performance is a key requirement. Go provides a robust benchmarking framework built directly into its standard library’s testing package. It helps developers measure the performance of their code, identify bottlenecks, and verify optimizations. However, writing accurate benchmarks requires a good understanding of how the framework works internally and how the Go compiler optimizes code.

In this post, we’ll dissect the Go benchmarking framework, explore its lifecycle, and understand the building blocks of a micro-benchmarking framework. We will cover:

- A case study of a deceptive benchmark

- Go’s benchmark source code and lifecycle

- High-precision timers

- Controlling compiler optimizations (similar to DoNotOptimize in C++)

- Building blocks of a benchmarking framework

A benchmark that looks correct, but isn’t

Let’s look at a simple example that tries to measure the cost of an addition operation involving two integers. We have a simple add function and a benchmark function that calls it b.N times.

```go
package main

import "testing"

func BenchmarkAdd(b *testing.B) {
	for i := 0; i < b.N; i++ {
		add(20, 20)
	}
}

func add(a, b int) int {
	return a + b
}
```

At first glance, this looks like a perfectly valid benchmark. We iterate b.N times and call the function we want to measure. However, if you run this benchmark, you might see a result that is suspiciously fast, perhaps a fraction of a nanosecond per operation.

Note: All benchmarks in this post were run on an Apple M4 Max.

```
BenchmarkAdd-14 1000000000 0.2444 ns/op
```

If we look at the assembly of the benchmark function, we see that the add function is not called at all.

```shell
go test -gcflags="-S" -run=^$ -bench=^$ . > file.s 2>&1
```

```
0x0000 00000 MOVD ZR, R1
0x0004 00004 JMP 12
0x0008 00008 ADD $1, R1, R1
0x000c 00012 MOVD 456(R0), R2
0x0010 00016 CMP R2, R1
0x0014 00020 BLT 8
0x0018 00024 RET (R30)
```

Effectively, the loop body becomes empty, and we end up measuring the overhead of the loop structure itself. Worse, the compiler could remove the loop entirely if it determined that the loop has no observable effect.

The assembly output confirms our suspicion:

- `MOVD ZR, R1`: initializes the loop counter `i` (in register `R1`) to zero (`ZR` is the zero register).

- `JMP 12`: jumps to offset 12 (`0x000c`).

- `MOVD 456(R0), R2`: loads `b.N` into `R2`.

- `CMP R2, R1`: compares `i` with `b.N`.

- `BLT 8`: if `i < b.N`, jumps back to offset 8, which is `ADD $1, R1, R1` (the increment step of the loop).

- `RET`: returns when done.

Crucially, the body of the loop (the call to add, or even the addition instructions) is entirely absent. We are merely measuring how fast the CPU can increment a counter and compare it to b.N.

We are no longer benchmarking add, we are just benchmarking the loop structure.

To fix this, we need to ensure the compiler cannot prove that the result is unused. A common technique is to assign the result to a package-level variable (a “sink”). This forces the compiler to perform the calculation because the sink variable is accessible outside the function’s scope, making the result “observable.”

```go
var sink int

func BenchmarkAddFix(b *testing.B) {
	for i := 0; i < b.N; i++ {
		sink = add(20, 20)
	}
}
```

```
BenchmarkAddFix-14 1000000000 0.2497 ns/op
```

```
0x0000 00000 MOVD ZR, R1
0x0004 00004 JMP 24
0x0008 00008 MOVD $40, R2
0x000c 00012 PCDATA $0, $-3
0x000c 00012 MOVD R2, test.sink(SB)
0x0014 00020 PCDATA $0, $-1
0x0014 00020 ADD $1, R1, R1
0x0018 00024 MOVD 456(R0), R2
0x001c 00028 CMP R2, R1
0x0020 00032 BLT 8
0x0024 00036 RET (R30)
```

Now, the assembly looks different:

- `MOVD $40, R2`: the compiler has still folded the addition `20 + 20` into the constant `40` (constant folding), but it cannot discard the result.

- `MOVD R2, test.sink(SB)`: stores that value `40` into the memory address of our global `sink` variable.

- `ADD $1, R1, R1`: increments the loop counter.

The loop now actually does some work: it performs the store to memory in every iteration. While the addition itself was folded (because add(20, 20) is constant), the operation of assigning to sink is preserved.

This benchmark ends up measuring the performance of a store instruction, not addition.

One might argue that the difference in the benchmark results is negligible (0.2497 ns/op vs 0.2444 ns/op). On a modern CPU like the Apple M4 Max, clocked at roughly 4.0 to 4.5 GHz (billions of cycles per second), a single cycle takes about 0.22 to 0.25 nanoseconds. Because these CPUs are superscalar (they can issue multiple instructions per cycle), they execute the loop overhead (increment + compare + branch) and the "useful" work (the store) in parallel within the same cycle. Effectively, both benchmarks measure the cost of one trip around the loop itself (~1 cycle).

Conclusion? Not quite

At this point, we are no longer trying to measure the cost of integer addition. That question was already answered by the compiler: it folded the addition away because the arguments to add were constants.

What we are trying to understand now is something more subtle: why do two benchmarks that clearly execute different instructions still report almost the same time per operation?

Simply preventing the compiler from deleting our code (using a sink) wasn't enough. We walked right into another trap: instruction-level parallelism (ILP). The sink assignment was independent enough that the CPU executed it largely in parallel with the loop bookkeeping (increment + branch). To truly measure an operation's latency, we must prevent this parallelism.

To see a real difference, we need to introduce a dependency that prevents parallel execution of instructions.

```go
var sink int

// Add a loop-carried dependency: each iteration depends on the previous one.
func BenchmarkDependency(b *testing.B) {
	for i := 0; i < b.N; i++ {
		sink += i
	}
}
```

In BenchmarkDependency, the operation sink += i creates a chain of dependencies:

- Iteration 1: read `sink` (0), add `0`, store `0`.

- Iteration 2: wait for iteration 1 to finish. Read `sink` (0), add `1`, store `1`.

- Iteration 3: wait for iteration 2 to finish. Read `sink` (1), add `2`, store `3`.

Because sink is a global variable, the Go compiler loads it from memory and stores it back in every iteration instead of keeping the running total in a register. This introduces a bottleneck known as store-to-load forwarding: the CPU must forward the data from the previous iteration's store (still sitting in the store buffer) to the load of the current iteration. On an M4 chip, this latency is approximately 4-5 clock cycles.

Expected time: 5 cycles * 0.25 ns/cycle ≈ 1.25 ns.

Actual result:

```
BenchmarkDependency-14 937794584 1.101 ns/op
```

This benchmark measures a loop-carried dependency and store-to-load latency.
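A quick way to sanity-check the ~1.1 ns result against the 4-5 cycle estimate is to convert ns/op back into cycles: at f GHz one cycle lasts 1/f ns, so cycles per op = ns/op × f. The sketch below applies that arithmetic to the three results in this post. It assumes a fixed clock between 4.0 and 4.5 GHz; real cores change frequency, so treat the output as a rough guide, and `cyclesPerOp` is just an illustrative helper.

```go
package main

import "fmt"

// cyclesPerOp converts a benchmark's ns/op figure into CPU cycles per
// operation, assuming the core runs at a fixed clock of ghz gigahertz.
// At f GHz one cycle lasts 1/f ns, so cycles = ns * f.
func cyclesPerOp(nsPerOp, ghz float64) float64 {
	return nsPerOp * ghz
}

func main() {
	// The ns/op figures reported earlier in this post.
	results := []struct {
		name    string
		nsPerOp float64
	}{
		{"BenchmarkAdd", 0.2444},
		{"BenchmarkAddFix", 0.2497},
		{"BenchmarkDependency", 1.101},
	}
	for _, r := range results {
		// Assumed clock range for the M4 Max: 4.0 to 4.5 GHz.
		fmt.Printf("%-20s %.2f to %.2f cycles/op\n", r.name,
			cyclesPerOp(r.nsPerOp, 4.0), cyclesPerOp(r.nsPerOp, 4.5))
	}
}
```

For BenchmarkDependency this gives roughly 4.4 to 5.0 cycles per operation, in line with the store-to-load forwarding estimate, while the two earlier benchmarks come out at about one cycle.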

I would like to take a little digression and explain Store-to-Load Forwarding.

The Physics of a Memory Write

When the assembly says MOVD R2, sink(SB), it does not mean “Write to the RAM stick on the motherboard” immediately. It means: “Commit this value to the coherent memory system.”

The Journey of a Write (Store)

- CPU core (internal queue, the store buffer): the CPU puts the value (`sink=40`) into a small, super-fast queue inside the core called the store buffer.
  - Time taken: effectively instant (<1 cycle).
  - Purpose: the CPU can keep running without waiting for memory.

- L1 cache (the "real" memory view): eventually (perhaps 10-20 cycles later), the store buffer drains into the L1 data cache. This is the first place where other cores can see the change.

- RAM (main memory): much, much later (hundreds of cycles or more), when the cache line is evicted from L1, the value moves out to L2, L3, and finally to RAM.

Why Is BenchmarkDependency Slow? (The "Forwarding" Penalty)

In BenchmarkDependency (sink = sink + i), we do this in a tight loop:

- Iteration 1 writes `sink`. The value goes into the store buffer.

- Iteration 2 needs to read `sink`.
  - It checks the L1 cache. The value isn't there yet! (It's still in the store buffer.)
  - The CPU must perform a trick called store-to-load forwarding: it checks inside its own store buffer to find the pending write from iteration 1.

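The penalty: each iteration's load has to wait for the previous iteration's store to be forwarded out of the store buffer, so the iterations are serialized on that round trip. Searching and retrieving data from this internal buffer takes about 4-5 cycles on Apple Silicon (M-series).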
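One way to see the forwarding penalty from Go is to compare the global-accumulator loop with a version that keeps the running total in a local variable and publishes it to the sink only once. The sketch below is an informal experiment, not a rigorous one: we would expect the local version to approach one cycle per iteration, because its loop-carried dependency is a register add rather than a store-to-load round trip, but the actual numbers depend on the machine and on the code the compiler generates (check it with -gcflags=-S as above). The function names are illustrative.

```go
package main

import (
	"fmt"
	"testing"
)

var sink int

// sumGlobal accumulates directly into the package-level sink, the same
// pattern as BenchmarkDependency: every iteration loads and stores sink.
func sumGlobal(n int) {
	for i := 0; i < n; i++ {
		sink += i
	}
}

// sumLocal keeps the running total in a local variable, which the compiler
// can hold in a register, and publishes it to sink once at the end.
func sumLocal(n int) {
	acc := 0
	for i := 0; i < n; i++ {
		acc += i
	}
	sink = acc
}

func main() {
	// The testing docs say Init is needed when calling Benchmark outside go test.
	testing.Init()
	cases := []struct {
		name string
		f    func(int)
	}{
		{"global", sumGlobal},
		{"local", sumLocal},
	}
	for _, c := range cases {
		f := c.f
		res := testing.Benchmark(func(b *testing.B) { f(b.N) })
		fmt.Printf("%-6s %s\n", c.name, res)
	}
}
```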

What went wrong in a SIMPLE benchmark?

Across these three benchmarks, nothing changed in Go’s benchmarking framework. What changed was everything around it.

| Benchmark | What we thought we measured | What we actually measured |
|---|---|---|
| BenchmarkAdd | Integer addition | Loop bookkeeping |
| BenchmarkAddFix | Integer addition | Store throughput |
| BenchmarkDependency | Integer addition | Store-to-load latency |

The framework faithfully executed each benchmark. The compiler and the CPU faithfully optimized the work we gave them.

The problem was not Go's benchmarking framework; the problem was our mental model of what a benchmark measures.

A benchmark is NOT the same as: run this function N times and divide by N.

A benchmark is an experiment involving:

- the benchmark runner

- the compiler

- the CPU microarchitecture

- and how we structure observable effects

Even before looking at Go’s benchmarking code, an important pattern emerges:

The framework controls how often code runs, but the compiler and the CPU control what actually runs.

To understand what Go’s benchmarking framework does, and just as importantly, what it deliberately does not do, we need to look at how benchmarks are executed internally.

That’s where we go next.

Who Actually Controls a Go Benchmark?

When we write a benchmark like BenchmarkAdd, it is easy to imagine that Go simply runs the function in a tight loop and reports the time. That mental model is convenient and wrong.

In reality, the benchmark function is only one small part of a larger execution process controlled entirely by Go’s benchmarking framework. The key idea to keep in mind is this:

- The benchmark function provides work.

- The framework controls execution.

Let’s walk through what actually happens when you run go test -bench to see who is really in charge.

Discovery: Scanning for Candidates

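The go test command begins by scanning the package's _test.go files for functions that follow the convention func BenchmarkXxx(b *testing.B). It then generates a test main program that registers each one as a testing.InternalBenchmark (a name plus a function). At this stage, the benchmark is just a function value, registered and waiting.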

Context Initialization: More Than a Counter

Before running, the framework creates a testing.B value and hands your function a pointer to it. This object isn't just a simple loop counter; it's the control center. It holds b.N (benchmark iterations), tracks timing, records memory allocations, and stores metadata. Crucially, you never choose b.N: the framework sets it (starting with 1) and decides how many times to run the benchmark by predicting iterations.

Iteration Selection: The Framework’s Choice

The benchmark function does not decide how many times to run. The framework repeatedly invokes the function with increasing values of b.N, starting at 1 and growing by at most 100× per round, and measures how long each run takes. It keeps adjusting b.N until a single run lasts at least the target benchmark time (-benchtime, 1s by default).

The Timing Trap: External Boundaries

The benchmark function runs inside the timing boundaries, but it does not create them. The framework starts the timer, calls the benchmark function, and stops the timer.

Calls like b.ResetTimer() are simply requests to the framework to adjust its internal clock; you are never measuring time yourself.

Summary: The Control Boundary

By now, a clear boundary should be visible:

- The Framework controls when, how often, and how long code runs.

- The Compiler and CPU control what instructions actually execute.

- The Author (you) controls which effects are observable.

Understanding this separation explains why our earlier benchmarks could run perfectly yet measure nothing but loop overhead.

Go’s Execution of Benchmarks

The execution of a benchmark function involves the following concepts:

- Discovery

- Running Root Benchmark

- Running User Benchmark

- Initial Verification: run1

- Benchmark Loop: run

- Predicting Iterations

- Result Collection

- High Precision Timing

1. Discovery

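As described above, go test discovers functions of the form func BenchmarkXxx(b *testing.B) and registers them. When the test binary runs with -bench, the generated main calls (*testing.M).Run, which in turn calls the unexported runBenchmarks function below; RunBenchmarks is an exported wrapper around it.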

```go
func RunBenchmarks(
	matchString func(pat, str string) (bool, error),
	benchmarks []InternalBenchmark,
) {
	runBenchmarks("", matchString, benchmarks)
}

func runBenchmarks(
	importPath string,
	matchString func(pat, str string) (bool, error),
	benchmarks []InternalBenchmark,
) bool {
	// If no flag was specified, don't run benchmarks.
	if len(*matchBenchmarks) == 0 {
		return true
	}
	// (bstate is initialized in lines omitted from this excerpt.)
	var bs []InternalBenchmark
	for _, Benchmark := range benchmarks {
		if _, matched, _ := bstate.match.fullName(nil, Benchmark.Name); matched {
			bs = append(bs, Benchmark)
		}
	}
	main := &B{
		common:     common{ ... },
		importPath: importPath,
		benchFunc: func(b *B) {
			for _, Benchmark := range bs {
				b.Run(Benchmark.Name, Benchmark.F)
			}
		},
		benchTime: benchTime,
		bstate:    bstate,
	}
	main.runN(1)
	return !main.failed
}
```

The runBenchmarks function acts as the entry point for the entire benchmarking suite. It filters the registered benchmarks against the user-provided -bench flag (regex), creating a list of matching benchmarks. It then constructs a root testing.B object named main.

- Filtering: it filters registered benchmarks against the user-provided `-bench` flag.

- Main benchmark: it constructs a root `testing.B` object named `main`. This one is special: it doesn't measure performance itself but effectively serves as the parent container.

- Execution: it kicks off execution by calling `main.runN(1)`. This starts the root benchmark, which in turn invokes the specific benchmarks via `b.Run`.

Note: The benchFunc inside main is the closure responsible for iterating over and running all filtered benchmarks. InternalBenchmark holds a benchmark's name and its function.
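The filtering step can be approximated with the standard regexp package. The sketch below is a simplified, hypothetical stand-in for bstate.match.fullName: it matches each registered name against the whole -bench pattern, whereas the real matcher also splits the pattern on '/' to select sub-benchmarks. It does reuse the real testing.InternalBenchmark type, which pairs a Name with a function F.

```go
package main

import (
	"fmt"
	"regexp"
	"testing"
)

// filterBenchmarks keeps the benchmarks whose names match pattern.
// Hypothetical and simplified: no '/'-separated sub-benchmark matching.
func filterBenchmarks(pattern string, all []testing.InternalBenchmark) ([]testing.InternalBenchmark, error) {
	re, err := regexp.Compile(pattern)
	if err != nil {
		return nil, err
	}
	var matched []testing.InternalBenchmark
	for _, bm := range all {
		if re.MatchString(bm.Name) {
			matched = append(matched, bm)
		}
	}
	return matched, nil
}

// noop is a placeholder benchmark body for the example registry.
func noop(b *testing.B) {}

func main() {
	// A registry shaped like the one go test generates (example names).
	registry := []testing.InternalBenchmark{
		{Name: "BenchmarkAdd", F: noop},
		{Name: "BenchmarkAddFix", F: noop},
		{Name: "BenchmarkDependency", F: noop},
	}
	for _, pattern := range []string{".", "Add", "^BenchmarkAdd$"} {
		matched, err := filterBenchmarks(pattern, registry)
		if err != nil {
			panic(err)
		}
		names := make([]string, len(matched))
		for i, bm := range matched {
			names[i] = bm.Name
		}
		fmt.Printf("-bench=%q -> %v\n", pattern, names)
	}
}
```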

2. Running Root Benchmark

As mentioned earlier, the execution starts with main.runN(1). This runN method is the core engine of the framework, responsible for setting up the environment and executing the benchmark function.

It starts by acquiring a global lock benchmarkLock. This ensures that only one benchmark runs at a time, preventing interference from other benchmarks.

```go
func (b *B) runN(n int) {
	benchmarkLock.Lock()
	defer benchmarkLock.Unlock()
	// Try to get a comparable environment for each run
	// by clearing garbage from previous runs.
	runtime.GC()
	b.resetRaces()
	b.N = n
	b.loop.n = 0
	b.loop.i = 0
	b.loop.done = false
	b.parallelism = 1
	b.ResetTimer()
	b.StartTimer()
	b.benchFunc(b)
	b.StopTimer()
	b.previousN = n
	b.previousDuration = b.duration

	if b.loop.n > 0 && !b.loop.done && !b.failed {
		b.Error("benchmark function returned without B.Loop() == false (break or return in loop?)")
	}
}
```

The runN method is where the framework actually measures execution time. It is the engine that drives every benchmark run.

Before starting the clock, it prepares the runtime environment to ensure consistent results:

- `runtime.GC()`: forces a full garbage collection, so that memory allocated by previous benchmarks doesn't affect the current one, providing a "clean slate."

- `b.resetRaces()`: if you are running with `-race`, this resets the benchmark's race-error baseline, so that races reported during earlier runs are not attributed to this one.

- `b.N`: the number of iterations the benchmark function should run.

- `b.previousN` and `b.previousDuration`: record this run's iteration count and measured duration. These per-run numbers are what the later step, Predicting Iterations, relies on: the framework uses the iteration count and elapsed time to calculate the benchmark's rate and estimate the next N.

For the Root Benchmark (Main), n is always 1. Its benchFunc iterates over all the user benchmarks (like BenchmarkAdd) and calls b.Run on them.

It is important to note that runN is not specific to the main benchmark. It is the same method that will eventually run your benchmark code, which we will explore next.
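To make the shape of runN concrete without the rest of the testing package, here is a toy model. Everything in it is hypothetical: `miniB` keeps only the fields runN touches and omits the lock, race bookkeeping, memory statistics, and b.Loop state, and it times with time.Now/time.Since, which is what the framework does on non-Windows platforms.

```go
package main

import (
	"fmt"
	"runtime"
	"time"
)

// miniB is a hypothetical, stripped-down model of testing.B. It keeps only
// what runN needs: the iteration count, the timer, and the previous run.
type miniB struct {
	N                int
	benchFunc        func(b *miniB)
	timerOn          bool
	start            time.Time
	duration         time.Duration
	previousN        int
	previousDuration time.Duration
}

// ResetTimer zeroes the accumulated time and restarts the clock if it is running.
func (b *miniB) ResetTimer() {
	if b.timerOn {
		b.start = time.Now()
	}
	b.duration = 0
}

// StartTimer starts the clock unless it is already running.
func (b *miniB) StartTimer() {
	if !b.timerOn {
		b.start = time.Now()
		b.timerOn = true
	}
}

// StopTimer adds the time since the last start to the total.
func (b *miniB) StopTimer() {
	if b.timerOn {
		b.duration += time.Since(b.start)
		b.timerOn = false
	}
}

// runN mirrors the shape of testing's runN: collect garbage, set N,
// time exactly one call of the benchmark function, record the run.
func (b *miniB) runN(n int) {
	runtime.GC()
	b.N = n
	b.ResetTimer()
	b.StartTimer()
	b.benchFunc(b)
	b.StopTimer()
	b.previousN = n
	b.previousDuration = b.duration
}

var sink int

func main() {
	b := &miniB{benchFunc: func(b *miniB) {
		for i := 0; i < b.N; i++ {
			sink += i
		}
	}}
	for _, n := range []int{1, 100, 10000} {
		b.runN(n)
		fmt.Printf("N=%-6d took %v\n", b.previousN, b.previousDuration)
	}
}
```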

3. Running User Benchmark

The Run method orchestrates the creation and execution of sub-benchmarks. When main iterates over all user benchmarks (e.g., BenchmarkAdd), it calls this method.

Key responsibilities:

- Release lock: it releases `benchmarkLock`, because sub-benchmarks run as independent entities and need to acquire it themselves (each of their `runN` calls takes the lock).

- Creation of testing.B: it creates a new `testing.B` context (`sub`) for the user benchmark.

- Execution: it calls `sub.run1()` for the first run and `sub.run()` for the remaining runs.

```go
func (b *B) Run(name string, f func(b *B)) bool {
	b.hasSub.Store(true)
	benchmarkLock.Unlock()
	defer benchmarkLock.Lock()

	benchName, ok, partial := b.name, true, false
	if b.bstate != nil {
		benchName, ok, partial = b.bstate.match.fullName(&b.common, name)
	}
	if !ok {
		return true
	}
	var pc [maxStackLen]uintptr
	n := runtime.Callers(2, pc[:])
	sub := &B{
		common:     common{ ... },
		importPath: b.importPath,
		benchFunc:  f,
		benchTime:  b.benchTime,
		bstate:     b.bstate,
	}
	if sub.run1() {
		sub.run()
	}
	b.add(sub.result)
	return !sub.failed
}
```

4. Initial Verification: run1

Before the framework attempts to run any benchmark millions of times, it runs it exactly once via run1().

It serves two critical purposes:

- Correctness Check: It ensures the benchmark function doesn’t panic or fail immediately.

- Discovery: it provides the first data point. If `run1` takes 1 second, there is no need to ramp up to `N=1,000,000`; `n=1` is already enough.

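It launches a separate goroutine to execute runN(1). This isolation lets FailNow and SkipNow, which end the calling goroutine via runtime.Goexit, stop the benchmark without stopping the framework: the deferred send on the signal channel still runs, so run1 always learns that the run is over.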

```go
func (b *B) run1() bool {
	go func() {
		defer func() {
			b.signal <- true
		}()
		b.runN(1)
	}()
	<-b.signal
	if b.failed {
		fmt.Fprintf(b.w, "%s--- FAIL: %s\n%s", b.chatty.prefix(), b.name, b.output)
		return false
	}
	b.mu.RLock()
	finished := b.finished
	b.mu.RUnlock()
	if b.hasSub.Load() || finished {
		return false
	}
	return true
}
```

5. Benchmark Loop: run

After run1 passes, the framework proceeds to the main execution loop.

- `b.bstate != nil`: checks whether we are running in the standard mode via `go test -bench`. In this mode, `bstate` holds the benchmark state and configuration. The `processBench` function handles options like `-cpu` and `-count`, ultimately calling `doBench` for each combination.

- `b.launch()`: like `run1`, `launch` runs in a separate goroutine, started by `doBench`.

- `b.loop.n == 0`: skips the ramp-up when the benchmark used `b.Loop`, which performs its own ramp-up (a non-zero `b.loop.n` means `b.Loop` has already run).

- `b.benchTime.n > 0`: handles the case where the user specified a fixed iteration count (e.g., `-benchtime=100x`).
  - If so, it runs exactly that many times (for a count of 1, `run1` has already done the work).
  - Otherwise, it enters the dynamic ramp-up loop, where it uses `predictN` to predict the next iteration count until `b.duration` meets the target bench time (`d`).

- `b.runN(n)`: finally, `b.runN(n)` is invoked to execute the benchmark for the calculated number of iterations. Here, `b.N` is set to that count, which the user benchmark function reads.

```go
func (b *B) run() {
	if b.bstate != nil {
		// Running go test --test.bench
		b.bstate.processBench(b) // Must call doBench.
	} else {
		// Running func Benchmark.
		b.doBench()
	}
}

func (b *B) doBench() BenchmarkResult {
	go b.launch()
	<-b.signal
	return b.result
}

func (b *B) launch() {
	defer func() {
		b.signal <- true
	}()

	if b.loop.n == 0 {
		if b.benchTime.n > 0 {
			if b.benchTime.n > 1 {
				b.runN(b.benchTime.n)
			}
		} else {
			d := b.benchTime.d
			for n := int64(1); !b.failed && b.duration < d && n < 1e9; {
				last := n
				// Predict required iterations.
				goalns := d.Nanoseconds()
				prevIters := int64(b.N)
				n = int64(predictN(goalns, prevIters, b.duration.Nanoseconds(), last))
				b.runN(int(n))
			}
		}
	}
	b.result = BenchmarkResult{b.N, b.duration, b.bytes, b.netAllocs, b.netBytes, b.extra}
}
```

6. Predicting Iterations

This function is the heart of the “execution phase.” It decides how many times to run the benchmark next.

```go
func predictN(goalns int64, prevIters int64, prevns int64, last int64) int {
	if prevns == 0 {
		// Round up to dodge divide by zero. See https://go.dev/issue/70709.
		prevns = 1
	}
	n := goalns * prevIters / prevns
	n += n / 5
	n = min(n, 100*last)
	n = max(n, last+1)
	n = min(n, 1e9)
	return int(n)
}
```

- Linear extrapolation: `n := goalns * prevIters / prevns`. It starts with simple math: the number of iterations should equal the target duration divided by the time per iteration (`prevns / prevIters`). If 1 iteration took 1ms and our goal is 1000ms (`goalns`), we need 1000 iterations.

- The 20% pad: `n += n / 5`. Go deliberately overshoots the target by 20%. Why? To avoid undershooting. If we ran exactly as many iterations as predicted and they happened to run slightly faster than in the previous round (for example, because caches were warmer or the earlier measurement included fixed overhead), we might finish just under the target time, forcing yet another ramp-up round. Overshooting slightly ensures we cross the finish line in fewer steps.

- Growth cap: `n = min(n, 100*last)`. It prevents the iteration count from exploding too fast (e.g., if the previous run was suspiciously fast due to noise).

- Monotonicity: `n = max(n, last+1)`. We must always run at least one more iteration than before.

- Safety cap: `n = min(n, 1e9)`. It caps the iteration count at 1 billion. This keeps `n` within `int` range on 32-bit platforms, where `int` is 32 bits, and ensures that we don't accidentally schedule a run that takes days.

This prediction loop continues until b.duration reaches the target -benchtime, the benchmark fails, or n reaches the 1e9 cap.

7. Result Collection

Once the final run completes, the framework captures its statistics. The BenchmarkResult struct holds the "scorecard": the iteration count of the final run (N), that run's elapsed time (T), and other fields such as bytes processed, allocation counts, and extra reported metrics. These values are later used to compute the ns/op metric.

```go
// At the end of launch (shown above):
b.result = BenchmarkResult{b.N, b.duration, b.bytes, b.netAllocs, b.netBytes, b.extra}

type BenchmarkResult struct {
	N int           // The number of iterations.
	T time.Duration // The total time taken.
	//...
}
```

8. High Precision Timing

Benchmarking code that runs in nanoseconds requires a clock with nanosecond precision.

- Linux/macOS: since Go 1.9, `time.Now()` carries a monotonic clock reading (obtained via `runtime.nanotime()`), expressed in nanoseconds. So calling `time.Now()` is effectively high precision on these platforms, although the actual resolution depends on the operating system's clock.

- Windows: the standard system timer on Windows historically had low resolution (~1-15ms). To guarantee accuracy across all platforms, Go's benchmarking framework hides timing behind its own `highPrecisionTimeNow`/`highPrecisionTimeSince` helpers and uses `QueryPerformanceCounter` (QPC) on Windows to bypass the system clock's limitations.

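```go
// Simplified view of runN (full version above): the timer brackets the call.
func (b *B) runN(n int) {
	b.StartTimer()
	b.benchFunc(b)
	b.StopTimer()
}

func (b *B) StartTimer() {
	if !b.timerOn {
		// ... (memory statistics omitted)
		b.start = highPrecisionTimeNow()
		b.timerOn = true
	}
}

func (b *B) StopTimer() {
	if b.timerOn {
		b.duration += highPrecisionTimeSince(b.start)
		// ... (memory statistics omitted)
		b.timerOn = false
	}
}

// Non-Windows build:
//go:build !windows

func highPrecisionTimeNow() highPrecisionTime {
	return highPrecisionTime{now: time.Now()}
}

func highPrecisionTimeSince(b highPrecisionTime) time.Duration {
	return time.Since(b.now)
}

// Windows build:
//go:build windows

func highPrecisionTimeNow() highPrecisionTime {
	var t highPrecisionTime
	// This should always succeed for Windows XP and above.
	t.now = windows.QueryPerformanceCounter()
	return t
}
```

Building Blocks of a Benchmarking Framework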

By stepping through Go's benchmarking implementation, a clear pattern emerges. Go's framework is not a collection of ad-hoc features; it is an explicit encoding of a benchmarking model. At a high level, any serious benchmarking framework needs the following building blocks.

1. Definition

A benchmark begins as a user-defined unit of work. In Go, this is the BenchmarkX(b *testing.B) function. Crucially, this function does not control execution; it only defines what work should be performed.

2. Setup and teardown boundaries

A framework must distinguish between:

- work that should be measured

- work that should not

Go does this by placing timing boundaries outside the benchmark function and exposing explicit controls (ResetTimer, StopTimer, StartTimer) to the author.

This allows setup and cleanup to exist without contaminating measurements.

3. Iteration discovery (incidental warmup)

Go does not include an explicit warm-up phase.

Instead, it repeatedly executes the benchmark while discovering an appropriate iteration count. During this process, the benchmark naturally benefits from incidental warm-up effects such as cache population, branch predictor training, and CPU frequency stabilization.

These effects are not guaranteed or modeled explicitly; they are a byproduct of repeated execution, not a contract of the framework.

4. Iteration prediction

A benchmark framework must answer a hard question:

“How much work is enough to measure?”

Go answers this dynamically by:

- observing early runs

- extrapolating iteration counts

- overshooting slightly to avoid undersampling

This avoids both noisy micro-measurements and unnecessarily long runs.

5. High-precision timing

At nanosecond scales, clock choice matters.

Go abstracts platform-specific timing differences behind a consistent high-precision clock, ensuring that measurements are comparable across operating systems.

6. Result aggregation and reporting

Raw measurements are not useful on their own.

The framework is responsible for:

- aggregating results

- normalizing per operation

- presenting consistent metrics

This keeps benchmark code simple and focused.

7. DoNotOptimize

Some benchmarking frameworks, most notably Google Benchmark, provide an explicit utility called DoNotOptimize. Its purpose is not to disable compiler optimizations globally, but to prevent the compiler from discarding or reordering a specific computation that the benchmark author intends to measure.

Here is a tiny pseudocode snippet showing how DoNotOptimize would be used:

```go
func BenchmarkToSumFirst100(b *testing.B) {
	for i := 0; i < b.N; i++ {
		result := 0
		for count := 1; count <= 100; count++ {
			result += count
		}
		DoNotOptimize(result) // Forces 'result' to be computed
	}
}
```

Note: DoNotOptimize is not a real function in Go; it is a placeholder for any observable side effect that the compiler must preserve.

In C++, DoNotOptimize is typically implemented using an empty inline assembly block with carefully chosen constraints:

```cpp
asm volatile(
    ""           // no actual instructions
    :
    : "r"(value) // value must be computed and placed in a register
    : "memory"   // assume arbitrary memory may be read or written
);
```

At first glance, this code appears to do nothing. The power of this construct lies entirely in how the compiler is forced to reason about it.

The volatile qualifier tells the compiler that the block has effects it cannot see, so the block must not be deleted as dead code, even though it emits no instructions. (GCC already treats an asm statement without output operands as volatile, but spelling it out makes the intent explicit.)

The "r"(value) input constraint tells the compiler that the assembly block uses value. This makes the value observable: it must be computed, materialized in a register, and available at this point in the program. As a result, the compiler cannot discard the computation as dead code. It can still compute the value more cheaply, for example by folding a computation on constants, so the constraint prevents deletion rather than every optimization.

The "memory" clobber introduces a compiler-level memory barrier. It informs the compiler that the assembly block may read or write arbitrary memory, preventing it from reordering loads and stores across this point. This is not a CPU fence; it does not emit hardware memory-ordering instructions, but it is a strong optimization barrier at compile time.

Together, these constraints create a powerful illusion: the compiler must assume the value matters, must preserve its computation, and must respect ordering, yet the generated machine code remains minimal or even empty. This makes DoNotOptimize ideal for benchmarking: it preserves the work without polluting the measurement with extra instructions.

Go deliberately avoids providing such a facility. Without inline assembly or compiler barriers in user code, Go requires observability to be expressed through language semantics, such as global variables or visible side effects, rather than compiler-specific escape hatches. This keeps the benchmarking framework portable and minimal, at the cost of requiring deeper awareness from the benchmark author.
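In Go, the closest portable stand-in for the pseudocode above is a small helper that writes to a package-level sink, the same trick as BenchmarkAddFix. The `doNotOptimize` helper below is hypothetical, not a standard library function, and unlike the empty asm block it is not free: each call costs at least a real store. We would expect the inner loop of sumFirst100 to survive compilation, but as with every example in this post, the way to be sure is to inspect the -gcflags=-S output. (Go 1.24 also added testing.B.Loop, which keeps the arguments and results of calls in its loop body alive; this sketch sticks to the b.N loop used throughout the post.)

```go
package main

import (
	"fmt"
	"testing"
)

// sink is package-level, so stores to it count as observable side effects.
var sink int

// doNotOptimize is a hypothetical helper: storing v in sink makes the value
// observable, so the compiler cannot discard the computation that produced it.
func doNotOptimize(v int) {
	sink = v
}

// sumFirst100 is the work from the pseudocode above.
func sumFirst100() int {
	result := 0
	for count := 1; count <= 100; count++ {
		result += count
	}
	return result
}

func benchmarkSumFirst100(b *testing.B) {
	for i := 0; i < b.N; i++ {
		doNotOptimize(sumFirst100())
	}
}

func main() {
	// The testing docs say Init is needed when calling Benchmark outside go test.
	testing.Init()
	fmt.Println(testing.Benchmark(benchmarkSumFirst100))
}
```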

8. Explicit non-goals

Equally important are the things a benchmarking framework does not do.

Go’s framework does not:

- prevent compiler optimizations

- understand CPU microarchitecture

- infer programmer intent

Those responsibilities belong to the benchmark author. Understanding these boundaries is what separates a reliable benchmark from a misleading one.

Summary

Go’s benchmarking framework is often treated as a convenience feature, a tight loop with a timer attached. In reality, it is a carefully designed execution engine that orchestrates controlled performance experiments.

By reading through the internals, we saw that:

- benchmarks can look correct and still measure nothing

- compiler optimizations and CPU behavior matter as much as framework mechanics

- the framework controls execution, not meaning

Understanding these internals changes how you write and interpret benchmarks.

What you can do after understanding the internals

- Reason about b.N instead of fighting it: b.N is not an input; it is a value discovered by the framework. Expect it to vary, and design benchmarks whose work can be meaningfully amortized over many iterations.

- Confirm that benchmark code is actually executed: dead-code elimination and constant folding can silently erase the work you think you are measuring, and instruction-level parallelism can hide its cost. If you cannot explain why the compiler must execute your code, the benchmark result is meaningless.

- Use DoNotOptimize (or its Go equivalents) correctly: DoNotOptimize preserves observability, not intent. It prevents the compiler from discarding values, but it does not defeat instruction-level parallelism or isolate CPU costs. In Go, this responsibility is expressed through language-level side effects rather than compiler escape hatches.

- Diagnose surprising results instead of guessing: when a benchmark reports implausible numbers, you can reason about iteration discovery, compiler behavior, and microarchitectural effects instead of relying on trial and error.
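As a closing sketch, here is the original add benchmark rewritten with the checklist above in mind: the inputs come from package-level variables, so the compiler cannot fold the addition into a constant, and the result goes to a sink, so it cannot be discarded. This is an illustration under assumptions, not a verified fix: we would still expect it to report roughly one cycle per operation, because the iterations are independent and overlap, so it measures throughput rather than the latency of a single addition. Inspecting the generated code with -gcflags=-S, as at the start of this post, is the way to confirm what it actually executes.

```go
package main

import (
	"fmt"
	"testing"
)

// Inputs live in package-level variables, so add's arguments are not
// compile-time constants and the addition cannot be folded away.
var x, y = 20, 20

// sink makes the result observable, so the addition cannot be discarded.
var sink int

func add(a, b int) int {
	return a + b
}

func benchmarkAddVars(b *testing.B) {
	for i := 0; i < b.N; i++ {
		sink = add(x, y)
	}
}

func main() {
	// The testing docs say Init is needed when calling Benchmark outside go test.
	testing.Init()
	fmt.Println(testing.Benchmark(benchmarkAddVars))
}
```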